Exploring secure software development at Glasswall: the fortification of container images

Understanding CDR (Content Disarm and Reconstruction)

In the world of cybersecurity, Content Disarm and Reconstruction (CDR) stands as a critical line of defense against malicious content. By systematically analyzing and neutralizing potential threats within files, CDR technology ensures that only safe, sanitized content enters an organization's network. At Glasswall, our zero-trust file protection solution harnesses the power of CDR to provide unparalleled security, allowing businesses to operate with confidence in the face of ever-present digital risks.

We’re excited to introduce our latest blog series dedicated to secure software development practices here at Glasswall. As a leading advocate for cybersecurity, Glasswall is committed to pioneering innovative solutions to safeguard digital environments from evolving threats. In this series, we'll delve into the core principles and methodologies that define our approach. Whether you're a prospective client considering Glasswall's services, an existing customer curious about how we work, or an external engineer seeking insights into similar problems, we hope this series will be informative for you.

Defining Cloud-Native

In today's digital landscape, the concept of cloud-native has emerged as a cornerstone of modern software development. At its core, cloud-native refers to the design and deployment of applications optimized for cloud environments. By leveraging cloud-native technologies and architectures, organizations can achieve greater agility, scalability, and resilience in their software infrastructure. As we navigate the intricacies of secure software development, understanding cloud-native principles is essential to ensuring the robustness and adaptability of our solutions.

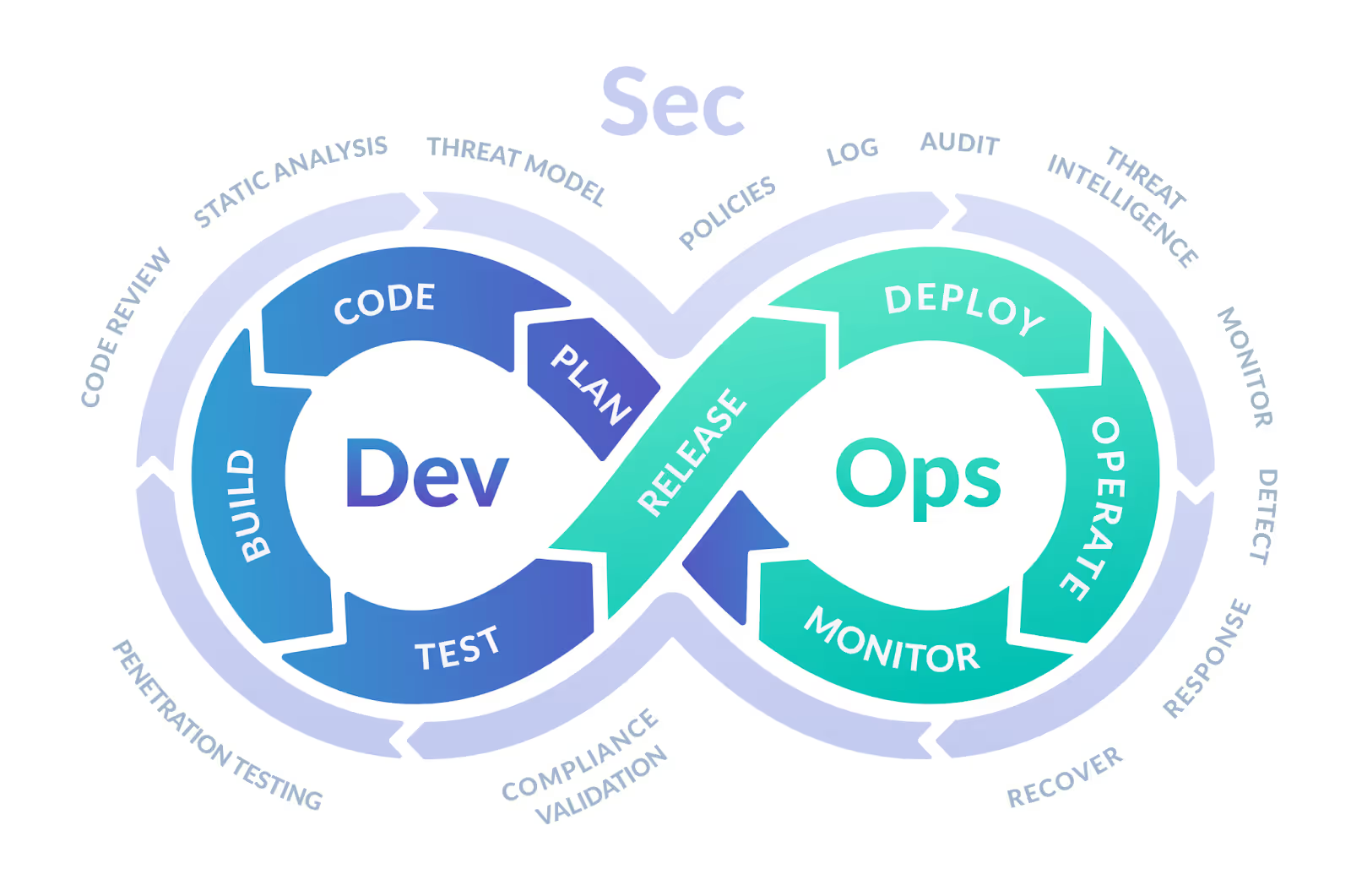

Meet the DevSecOps Team

Glasswall’s DevSecOps (Development, Security, & Operations) team is made up of Chris Holman, our Team Lead, and Gurunatha Reddy, our seasoned Senior DevSecOps Engineer, whose vision and expertise guide our efforts towards excellence. Together, they work tirelessly to innovate and elevate our security protocols.

Series Overview

Throughout this series, we'll take a high-level look at how we secure each layer of our software development lifecycle and how this enables us to deliver secure software to our customers.

In our journey working with diverse customers across multiple geographies and business verticals, including defense and government agencies, we have encountered stringent compliance requirements. Rather than perceiving these as obstacles, we see them as valuable opportunities for growth and improvement.

For example, the US executive order that introduced Software Bill of Materials (SBOM) requirements necessitated a thorough understanding of our software components. We’ve also had to familiarize ourselves with the Defense Information Systems Agency (DISA) Security Technical Implementation Guides (STIGs) and apply them to our deployable operating systems. The need to deploy our solutions into offline environments led us to implement code and image signing for authenticity verification.

Additionally, we made a strategic shift in our Secure Software Development Lifecycle (SSDLC), integrating security measures at an earlier stage in the development process. These experiences have broadened our expertise within the team and empowered us to meet our clients' needs more effectively.

Fortifying container images: trimming the fat for a secure and efficient process

The pursuit of secure, efficient, and standardized deployment processes is a constant endeavor. A key area of focus for us has been the management of Docker (or container) images, and ensuring that the process of creating container images is consistent and secure.

However, this is not without its issues. We were dealing with numerous container images, each pulling a standard version of a plain distro that ships with default packages, excessive permissions, and no common baseline across images. This opens the door to many significant security vulnerabilities, which are constantly being discovered in container images, and makes the estate challenging to manage robustly.

These standard distributions are often pre-bundled with a variety of packages to provide a range of functionality out of the box. These can include basic utilities, libraries, and services such as text editors (vim, nano), network tools (netstat, ping), and server software (Apache, MySQL). Whilst these packages can be useful, every package a service does not need adds bloat and increases the attack surface with no benefit in return.
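One way to make this bloat concrete is to compare what is installed against what a service actually needs. The sketch below assumes a Debian-based image, whose package database is dpkg's `status` file (normally `/var/lib/dpkg/status`), and a hypothetical per-service allowlist; it illustrates the idea under those assumptions and is not Glasswall's tooling.

```python
# Assumes a Debian-based image whose package database uses dpkg's
# "status" file format (normally /var/lib/dpkg/status).


def parse_installed(status_text: str) -> set[str]:
    """Return names of packages whose Status is 'install ok installed'."""
    installed = set()
    for stanza in status_text.strip().split("\n\n"):
        fields = {}
        for line in stanza.splitlines():
            # Continuation lines (e.g. long descriptions) start with whitespace.
            if line[:1].isspace() or ":" not in line:
                continue
            key, _, value = line.partition(":")
            fields[key] = value.strip()
        if fields.get("Status") == "install ok installed" and "Package" in fields:
            installed.add(fields["Package"])
    return installed


def unnecessary_packages(status_text: str, required: set[str]) -> list[str]:
    """Installed packages that are not on the service's allowlist."""
    return sorted(parse_installed(status_text) - required)


if __name__ == "__main__":
    sample = (
        "Package: libc6\nStatus: install ok installed\n\n"
        "Package: vim\nStatus: install ok installed\n\n"
        "Package: nano\nStatus: deinstall ok config-files\n"
    )
    # Hypothetical allowlist for a service that needs only the C library.
    print(unnecessary_packages(sample, required={"libc6"}))  # ['vim']
```

Packages left only as configuration files (such as `nano` above) are not counted as installed, so the report focuses on binaries that actually ship in the image.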

The challenge we faced was how to streamline the process so that, by default, we use secure images that have been heavily scrutinized, adapted, and suitably hardened. Our objective was to ensure these images are secure by default, automatically available to the services that require them, and consistently updated.

The Solution

To address the challenge of managing numerous container images, we embarked on a journey to standardize the approach used across all our services. This was not a straightforward task and required substantial effort to make our services compatible with the chosen distro.

With the distro standardized, we turned our attention to dissecting it. This process was guided by three fundamental questions: What basic functionality is required? What needs to run? Who needs to run it? The answers to these questions gave us a roadmap for eliminating the unnecessary elements that come with all default images, thereby significantly reducing our attack surface.

Our approach included the following steps, among others:

- Removing interactive shells for users, thereby limiting potential entry points for unauthorized access.

- Eliminating unnecessary user accounts and retaining only a subset of users, further reducing potential entry points and attack methods.

- Increasing the strictness of permissions across file systems and directories, adding an additional layer of security.

- Removing SUID & SGID files, kernel tunables, init scripts, crontabs and fstab, stripping away potential areas for exploitation.

- Uninstalling all unused packages to keep the footprint minimal.

- Updating the container image to run as a non-root user with minimal permissions.
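Several of the steps above can be verified mechanically once an image is built. The following is a minimal sketch, not Glasswall's implementation: it audits a root filesystem tarball, such as one produced with `docker create` followed by `docker export`, for setuid/setgid files, world-writable files, and accounts whose login shell is interactive. The set of shells treated as non-interactive is an assumption and varies between distros.

```python
import stat
import sys
import tarfile

# Login shells treated as non-interactive. This set is an assumption;
# adjust it to match the base distro.
NON_INTERACTIVE_SHELLS = {"/usr/sbin/nologin", "/sbin/nologin", "/bin/false", "/usr/bin/false"}


def _normalise(name: str) -> str:
    """Strip a leading './' or '/' so paths compare consistently."""
    if name.startswith("./"):
        name = name[2:]
    return name.lstrip("/")


def interactive_accounts(passwd_text: str) -> list[str]:
    """Return usernames whose login shell is not in NON_INTERACTIVE_SHELLS."""
    users = []
    for line in passwd_text.splitlines():
        fields = line.split(":")
        # An empty shell field defaults to /bin/sh, so it counts as interactive.
        if len(fields) == 7 and fields[6] not in NON_INTERACTIVE_SHELLS:
            users.append(fields[0])
    return users


def audit_rootfs_tar(fileobj) -> dict[str, list[str]]:
    """Audit a root filesystem tarball without extracting it to disk."""
    setid, world_writable, accounts = [], [], []
    with tarfile.open(fileobj=fileobj, mode="r:*") as tar:
        for member in tar:
            if not member.isreg():
                continue  # only regular files; skip dirs, symlinks, devices
            name = _normalise(member.name)
            if member.mode & (stat.S_ISUID | stat.S_ISGID):
                setid.append(name)
            if member.mode & stat.S_IWOTH:
                world_writable.append(name)
            if name == "etc/passwd":
                data = tar.extractfile(member).read().decode("utf-8", "replace")
                accounts = interactive_accounts(data)
    return {
        "setid_files": sorted(setid),
        "world_writable": sorted(world_writable),
        "interactive_accounts": accounts,
    }


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python audit_rootfs.py rootfs.tar
    with open(sys.argv[1], "rb") as handle:
        for check, paths in audit_rootfs_tar(handle).items():
            print(f"{check}: {paths or 'none'}")
```

For example, `docker create --name audit <image>` followed by `docker export audit > rootfs.tar` produces a tarball this script can read. Reading the archive in place, rather than extracting it, keeps the setuid/setgid bits intact regardless of who runs the audit.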

These measures made the resulting image inherently more secure than a standard version. However, we recognise that this was not a one-time fix: container images that are not regularly updated can become massive security risks over time.

To address this, we chose to maintain a hardened default image and store it within our container registry. This image serves as a secure foundation on which other services can build. We leveraged continuous integration/continuous deployment (CI/CD) pipelines to maintain a catalog of hardened Dockerfiles that reference our hardened container images, and then automated the process of building these images and pushing them into our container registry.
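A catalog like this only helps if every Dockerfile actually builds on the hardened images. A simple guard, sketched here under assumptions, is to scan each Dockerfile's `FROM` lines and flag any base image outside the hardened registry path. The prefix `registry.example.internal/hardened/` is hypothetical, and references to earlier build stages (declared with `AS <name>`) are allowed.

```python
import re

# Hypothetical registry path for hardened base images.
HARDENED_PREFIX = "registry.example.internal/hardened/"

# FROM [--flag=value ...] <image> [AS <stage-name>]
FROM_RE = re.compile(
    r"^\s*FROM\s+(?:--\S+\s+)*(?P<image>\S+)(?:\s+AS\s+(?P<alias>\S+))?\s*$",
    re.IGNORECASE,
)


def non_hardened_bases(dockerfile_text: str, prefix: str = HARDENED_PREFIX) -> list[str]:
    """Return base images that are neither hardened nor an earlier build stage."""
    stages: set[str] = set()
    offenders = []
    for line in dockerfile_text.splitlines():
        match = FROM_RE.match(line)
        if not match:
            continue
        image = match.group("image")
        if image.lower() not in stages and not image.startswith(prefix):
            offenders.append(image)
        if match.group("alias"):
            stages.add(match.group("alias").lower())
    return offenders


if __name__ == "__main__":
    dockerfile = (
        "FROM registry.example.internal/hardened/python:3.12 AS build\n"
        "RUN echo building\n"
        "FROM build\n"
        "FROM ubuntu:22.04\n"
    )
    print(non_hardened_bases(dockerfile))  # ['ubuntu:22.04']
```

A check like this could run as an early pipeline step. `FROM` lines that use build arguments (for example `FROM ${BASE}`) do not match the prefix and are flagged, which errs on the side of manual review.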

This encompasses several processes: building the container image, running secret scans against the entire image to ensure no secrets are present, and analyzing the image with a range of commercial and open-source container scanners to alert us to any underlying issues, whether within the distro itself or in the services built on top. We also generate detailed SBOMs for each image we build. Only once all these steps have completed successfully, without any warnings, is the image pushed into our container registry and made available for services to consume.
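The gating rule described here, push only when every step passes with no warnings, can be expressed compactly. In this sketch each stage (build, secret scan, container scan, SBOM generation) is a hypothetical function returning a pass/fail flag plus any warnings; the real scanners and registry client are not modelled.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class StageResult:
    """Outcome of one pipeline stage."""
    ok: bool
    warnings: list[str] = field(default_factory=list)


# A stage is a (name, function) pair; the function receives the image reference.
Stage = tuple[str, Callable[[str], StageResult]]


def run_gate(image: str, stages: list[Stage], push: Callable[[str], None]) -> bool:
    """Run stages in order and push only if every stage passes with zero warnings.

    Stops at the first failing stage, so later stages are skipped.
    """
    for name, stage in stages:
        result = stage(image)
        if not result.ok or result.warnings:
            print(f"{name}: blocked {image} (ok={result.ok}, warnings={len(result.warnings)})")
            return False
    push(image)
    return True


if __name__ == "__main__":
    # Hypothetical stand-ins for the real build, secret-scan,
    # container-scan and SBOM tools.
    def passing(image: str) -> StageResult:
        return StageResult(ok=True)

    def with_warning(image: str) -> StageResult:
        return StageResult(ok=True, warnings=["example finding"])

    stages = [
        ("build", passing),
        ("secret-scan", passing),
        ("container-scan", with_warning),
        ("sbom", passing),
    ]
    run_gate("example/base:1.0", stages, push=lambda img: print(f"pushed {img}"))
```

Run as a script, the example stops at the `container-scan` stage and nothing is pushed. Treating a warning exactly like a failure mirrors the "without any warnings" rule, and failing fast avoids spending time on later stages for an image that will be rejected anyway.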

As a further step, we’ve also adopted monitoring within our container registry, so we’re notified when a particular image tag becomes insecure; at that point it is removed and replaced with an up-to-date, secured image. We also maintain a policy that any image that hasn’t been pushed within a set time frame is removed and can no longer be referenced. This further increases our confidence that any container image in use is secure by default.
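The removal policy combines two triggers: a tag flagged as insecure, or a tag whose last push is older than the allowed window. A minimal sketch of that decision follows; the tag names, the flagged set, and the 30-day window are illustrative assumptions, and fetching this data from a real registry or scanner is out of scope.

```python
from datetime import datetime, timedelta, timezone


def tags_to_remove(
    last_pushed: dict[str, datetime],
    insecure: set[str],
    max_age: timedelta,
    now: datetime,
) -> list[str]:
    """Return tags that are flagged insecure or were last pushed more than max_age ago."""
    return sorted(
        tag
        for tag, pushed_at in last_pushed.items()
        if tag in insecure or now - pushed_at > max_age
    )


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    # Hypothetical registry state; in practice this would come from the
    # registry's API and from scanner findings.
    last_pushed = {
        "hardened/base:2024.01": now - timedelta(days=90),
        "hardened/base:2024.05": now - timedelta(days=3),
        "hardened/python:3.12": now - timedelta(days=10),
    }
    flagged = {"hardened/python:3.12"}
    # 30 days is an illustrative window, not Glasswall's actual value.
    print(tags_to_remove(last_pushed, flagged, timedelta(days=30), now))
    # ['hardened/base:2024.01', 'hardened/python:3.12']
```

Passing `now` in explicitly, rather than reading the clock inside the function, keeps the decision deterministic and easy to test.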

Summary

Adopting this approach has brought significant benefits to our customers. Firstly, the attack surface of our container images has been reduced dramatically: the images are clean, with all unnecessary elements stripped away. Regular updates ensure these images remain secure and up to date, and detailed SBOMs offer transparency, allowing our customers to understand the components of the images and their origins. Our commitment to security is ongoing; as a team, we don’t allow ourselves to become complacent, and we constantly evolve and refine our approach, staying vigilant to emerging threats and adapting when required.

Our goal is to share our experiences, solutions, and insights while raising awareness of Glasswall's security best practices. Whether we're discussing the intricacies of updating packages or exploring the nuances of secure software development, our aim remains the same: to foster a deeper understanding of cybersecurity principles and practices.

Join us in the next installment as we examine the challenges faced when automating the DISA STIG hardening process.
