Defending the future: a guide to fortifying AI against data poisoning attacks

A technological revolution

Recent leaps in machine learning (ML) and artificial intelligence (AI) technologies have given birth to a new technological revolution – embedding AI at the forefront of modern-day life.

ChatGPT quickly became the fastest growing application in history, bringing advanced ML capabilities to the fingertips of everyday users. Yet, this represents the tip of the iceberg and it is clear there is huge appetite for these algorithm-based technologies in both commercial and private user markets.

However, like all innovative technologies, the increase in availability and use of AI and ML solutions presents a new attack surface that bad actors can look to exploit. In this context, ‘adversarial machine learning’ is the term commonly used to describe attacks against ML models.

Data poisoning attacks: what are they and why do they matter?

Any AI or ML solution is only ever effective if its training data is clean. Training data is the initial source of learning that these solutions utilize to establish their decision-making processes.

A data poisoning attack occurs when a bad actor intentionally tampers with an AI model’s training data with the goal of deceiving the AI system into making harmful or incorrect decisions.

Bad actors use vulnerabilities, such as embedding active content within a file, to inject malicious or misleading data into the data set used to train the AI model.

These modifications to the training data can corrupt the learning process of the AI system – creating bias towards the attacker’s objective, enabling them to harvest sensitive data or simply cause the AI model to create incorrect outputs.

To put this issue into context, training the GPT-3 AI model reportedly cost around 16 million euros. Had its training data set been found to be poisoned in some way, the developers would likely have had to restart the entire process, from collecting raw data through to training the model, costing the organization many millions more.

Types of data poisoning attack:

Data poisoning attacks can be complex and come in many shapes and sizes. These threats can be instigated from within, or outside of, an organization. In order to adequately protect against them, it is important to understand the different methods of attack.

Label poisoning:

Entities with malicious intent try to manipulate the behaviour of an AI or ML model by introducing incorrect or harmful information into the dataset used to train it. In this type of attack, it is specifically the labels attached to the training data that are poisoned. The mislabelled content is designed to influence the model’s behaviour during the inference phase, the stage at which the model is used to make predictions on new and unseen data.

In this instance a bad actor may continuously feed the model with false information that, for example, hundreds of pictures of cars are in fact boats. Or in more serious cases, that a piece of malware is in fact a normal, harmless file.
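As a rough illustration of why mislabelled samples matter, the toy sketch below trains a very simple nearest-centroid classifier twice: once on clean data and once after car-like samples labelled as boats have been injected. The single "length in metres" feature, the sample values and the function names are all assumptions made up for this example; real models and attacks are far more complex.

```python
from statistics import mean

def train_centroids(samples):
    """Return {label: mean feature value} for a list of (feature, label) pairs."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}

def predict(centroids, value):
    """Pick the label whose centroid is closest to the given feature value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))

# Hypothetical clean training data: vehicle length in metres.
clean = [(4.0, "car"), (4.5, "car"), (5.0, "car"),
         (9.0, "boat"), (10.0, "boat"), (11.0, "boat")]

# Hypothetical poison: car-sized samples deliberately labelled as boats.
poison = [(4.2, "boat")] * 6

clean_model = train_centroids(clean)
poisoned_model = train_centroids(clean + poison)

# The same 6 m input is classified differently once the labels are poisoned.
print(predict(clean_model, 6.0))     # car
print(predict(poisoned_model, 6.0))  # boat
```

In this toy case the injected samples drag the "boat" centroid from 10.0 m down to roughly 6.1 m, which is enough to change the decision for borderline inputs.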

Training data poisoning:

Here the attacker manipulates a substantial portion of the data that is used to train the AI model to make predictions and generate responses.

These attacks introduce a backdoor, for example extra pixels added to the edge of an image, that triggers the model to misclassify any input carrying it, reducing the model’s effectiveness. This is especially damaging for AI-based cybersecurity products, as an attacker can fool the model into missing harmful content hidden within a file, such as malware.

By modifying much of the training data, attackers aim to significantly impact how the AI model learns – leading to incorrect patterns and associations that could completely warp the model’s ability to make correct judgements and give reasonable responses.

Model inversion attacks:

In this instance, bad actors take advantage of the responses generated by the AI model itself. Attackers submit carefully crafted queries and inputs to the AI model and then closely analyse the responses.

The goal of this attack is to manipulate the model’s responses to trick it into revealing privileged information or insights into the dataset it was trained on. From here, the attackers can utilize this information to further compromise the AI model, or to disclose or ransom any sensitive information uncovered. Attackers are even able to reverse-engineer and construct their own version of the model.

Stealth attacks:

These attacks are also known as targeted attacks and as the name suggests they are difficult for security teams to detect. Attackers aim to introduce weaknesses and flaws at the AI model’s development and testing phase. The alterations made by the attackers are subtle and difficult to detect due to their limited impact on the algorithm.

Once the AI model is deployed for use in the real world, the attackers then exploit the hidden vulnerabilities introduced earlier. This can have significant consequences for the solution itself and the organization it is deployed within.

Data poisoning, deep fakes and their impact on smart technologies:

Deep fakes are realistic images and videos created by AI. They are becoming ever more popular within the creative space, and large software companies such as Adobe have started to utilize them in their products to generate lifelike AI images quickly and easily for their users.

However, because these generative AI models are also created using training data, they are susceptible to data poisoning attacks that can cause serious security complications if left undetected.

Data poisoning can also be used to manipulate an AI model to exhibit specific characteristics or generate deep fakes with a particular bias. For example, a politically motivated attacker could spread misinformation on social media through a manipulated AI model.

In addition, attackers can manipulate the AI models behind smart devices and technologies by warping their understanding of facial features, facial expressions and voice patterns. This can have serious privacy and identity implications. An attacker may attempt to trick an AI-based security system, whether that be for a physical building, piece of tech or virtual library, using voice pattern manipulation. If the training process of an AI model has been compromised, it may believe that someone other than the rightful owner controls the system, compromising their home, an organisation or sensitive data.

Data poisoning in the real world: the attack on Microsoft’s chatbot, Tay

In 2016, Microsoft launched Tay, a friendly Twitter-based AI chatbot that users could follow and tweet with. Tay was designed to tweet back to users and learn about human interactions as it went. However, almost immediately Tay was targeted by several bad actors who continuously fed the AI vulgar and politically motivated material.

In less than 24 hours, Microsoft had to shut down the AI model because it began tweeting racist and other seriously inappropriate messages.

While this model was not as complex as many AI systems around today, it is a good indication of the impact that tainted training data can have on an AI system.

Microsoft released the following statement about the experience:

"Unfortunately, in the first 24 hours of coming online, a coordinated attack by a subset of people exploited a vulnerability in Tay. Although we had prepared for many types of abuses of the system, we had made a critical oversight for this specific attack. As a result, Tay tweeted wildly inappropriate and reprehensible words and images. We take full responsibility for not seeing this possibility ahead of time ... Right now, we are hard at work addressing the specific vulnerability that was exposed by the attack on Tay.

... To do AI right, one needs to iterate with many people and often in public forums. We must enter each one with great caution and ultimately learn and improve, step by step, and to do this without offending people in the process. We will remain steadfast in our efforts to learn from this and other experiences as we work toward contributing to an Internet that represents the best, not the worst, of humanity."

How to protect an AI model against data poisoning:

For complex AI models with large training data sets, it is significantly more challenging to address issues after a detected attack. Correcting a corrupted model involves complete and intricate analysis of its data sources, training inputs and security mechanisms; for large data sets this is often impossible to do.

Like most successful cyber defence strategies, prevention is the best approach to combating data poisoning. Below we have detailed a best practice guide to protecting against data poisoning attacks.

However, it is important to remember that these methods should be part of a broader security strategy, following a ‘defence in depth’ approach. It is essential to stay informed about new attack vectors and continuously update your defence mechanisms. Regularly auditing and validating your datasets and models is crucial to maintaining the integrity of your ML application.

Artificial Intelligence security: our best practice recommendations:

1. Data sanitization:

Ensure that sensitive information, personally identifiable information (PII), risky content within a file, or any other data that could pose a risk if exposed or misused is removed. Our recommended data sanitization actions include:

Outlier Detection: Identify and remove outliers or anomalies in your dataset. These could be instances of maliciously injected data.

Anomaly Detection Techniques: Utilize machine learning techniques, such as clustering or dedicated anomaly detection algorithms, to detect unusual patterns or instances in the data.

Data Validation: Implement strict data validation checks to ensure that the incoming data adheres to predefined rules and standards. Reject any data that does not meet these criteria. We recommend that organizations utilize our industry-leading, patented zero-trust Content Disarm and Reconstruction (CDR) technology to validate, rebuild and remove risky elements from a file, in line with the manufacturer’s recommended file specifications.
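The outlier detection and data validation steps above can be sketched in a few lines. This is a minimal example under stated assumptions, not a production filter: the modified z-score based on the median absolute deviation is one common choice among many, and the record fields (`length_m`, `label`), the allowed labels and the 0 to 400 range are hypothetical rules invented for illustration.

```python
from statistics import median

def mad_outliers(values, threshold=3.5):
    """Return indexes of values whose modified z-score exceeds the threshold.

    Uses the median absolute deviation (MAD), which is less easily skewed
    by the outliers themselves than a mean/standard-deviation test.
    """
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no spread to measure against
    return [i for i, v in enumerate(values)
            if 0.6745 * abs(v - med) / mad > threshold]

# Hypothetical validation rules for an incoming training record.
ALLOWED_LABELS = {"car", "boat"}

def is_valid_record(record):
    """Reject records that break the predefined schema or value ranges."""
    if not isinstance(record, dict):
        return False
    length = record.get("length_m")
    if isinstance(length, bool) or not isinstance(length, (int, float)):
        return False
    return 0 < length <= 400 and record.get("label") in ALLOWED_LABELS

readings = [10.1, 9.8, 10.3, 10.0, 9.9, 55.0, 10.2]
print(mad_outliers(readings))  # [5]
print(is_valid_record({"length_m": 4.5, "label": "car"}))    # True
print(is_valid_record({"length_m": 4.5, "label": "plane"}))  # False
```

Flagged samples are best reviewed before being dropped, since a legitimate but rare example can look much like an injected one.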

2. Data Pre-processing methods:

Data pre-processing is a crucial step in the data analysis and machine learning pipeline. It involves cleaning, transforming, and organizing raw data into a format that is suitable for analysis or model training. The primary goals of data pre-processing are to enhance the quality of the data, improve its compatibility with the chosen analysis or modelling techniques, and facilitate the extraction of meaningful insights:

Data Augmentation: Generate additional training samples by applying random transformations to the existing data (e.g., rotation, flipping, cropping). This can help diversify the dataset and make it more resistant to poisoning attacks.

Noise Injection: Introduce random noise to the training data to make it more robust against poisoning attempts. This can involve adding tiny amounts of random values to the features.

Feature Scaling and Normalization: Standardize the scale of features to prevent attackers from exploiting variations in feature scales. This can be done through techniques like Z-score normalization.

Cross-Validation: Use cross-validation techniques to split your dataset into multiple folds for training and testing. This helps ensure that your model generalizes well to unseen data and is less susceptible to overfitting on poisoned instances.

Data Diversity: Ensure that your training dataset is diverse and representative of the real-world scenarios the model will encounter. This can make it harder for attackers to manipulate the model by biasing it toward specific conditions.
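Two of the pre-processing steps above, Z-score normalization and noise injection, are simple enough to show directly. The sketch below uses only the Python standard library; the function names, the noise scale of 0.01 and the sample column are assumptions chosen for illustration, and suitable values will depend on the data set.

```python
import random
from statistics import mean, pstdev

def zscore_normalize(column):
    """Rescale a feature column to zero mean and unit standard deviation."""
    mu, sigma = mean(column), pstdev(column)
    if sigma == 0:
        return [0.0 for _ in column]  # constant column carries no information
    return [(x - mu) / sigma for x in column]

def add_gaussian_noise(column, scale=0.01, seed=None):
    """Return a copy of the column with small Gaussian noise added."""
    rng = random.Random(seed)  # a fixed seed keeps experiments repeatable
    return [x + rng.gauss(0.0, scale) for x in column]

raw = [2.0, 4.0, 6.0, 8.0]
normalized = zscore_normalize(raw)
noisy = add_gaussian_noise(normalized, scale=0.01, seed=42)

print(normalized)
print(noisy)
```

Normalizing first and then adding noise keeps the noise scale meaningful across features that originally had very different ranges.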

3. Regular monitoring:

Ensure measures are put in place to monitor the behaviour of an AI model and any incoming data over time, including:

Model Performance Monitoring: Continuously monitor the performance of your model in real-world scenarios. If there is a sudden drop in performance, it might indicate the presence of poisoned data or other problems such as concept drift.

Data Drift Detection: Implement mechanisms to detect changes in the distribution of incoming data over time. Significant changes might suggest an attempt to poison the model.
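As one possible way to implement a basic drift check, the sketch below compares the mean of a recent window of incoming values against a trusted baseline and raises a flag when the shift is large relative to the baseline's spread. The threshold of 3.0 and the sample values are assumptions for illustration; production systems usually compare whole distributions rather than a single mean.

```python
from math import sqrt
from statistics import mean, stdev

def mean_shift_score(baseline, window):
    """How many standard errors the window mean sits from the baseline mean."""
    standard_error = stdev(baseline) / sqrt(len(window))
    shift = abs(mean(window) - mean(baseline))
    if standard_error == 0:
        return 0.0 if shift == 0 else float("inf")
    return shift / standard_error

def drift_detected(baseline, window, threshold=3.0):
    """Flag the window when its mean has moved suspiciously far."""
    return mean_shift_score(baseline, window) > threshold

# Hypothetical feature values seen during trusted training.
baseline = [10.0, 10.2, 9.8, 10.1, 9.9, 10.0, 10.3, 9.7]

print(drift_detected(baseline, [10.1, 9.9, 10.0, 10.2]))   # False
print(drift_detected(baseline, [12.0, 12.5, 11.8, 12.2]))  # True
```

A flag from a check like this does not prove poisoning, as legitimate changes in the real world produce the same signal, but it is a useful prompt to inspect the incoming data before it reaches a retraining pipeline.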

4. Secure data handling:

To protect the training data from unauthorized manipulation, it is important to establish strict access controls and security measures such as secure data storage, encryption, and access control tools:

Data Encryption: When transmitting or storing sensitive data, use encryption to protect it from unauthorized access.

Access Controls: Implement strict access controls to limit who can modify or access the training data.

File Hashing & Data Validation: Syntactic and semantic validation of data passing across a trust boundary will help to ensure that data is not manipulated or poisoned during transfer. Fingerprinting of files ensures that a chain of custody is preserved.
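File fingerprinting can be as simple as recording a SHA-256 hash for every file in the training set and re-checking those hashes before each training run. The sketch below is a minimal example using Python's standard `hashlib` module; the function names and the idea of holding the manifest as an in-memory dictionary are assumptions for illustration, and in practice the manifest itself would need to be stored somewhere the attacker cannot modify.

```python
import hashlib
from pathlib import Path

def sha256_of_file(path, chunk_size=65536):
    """Hash a file in chunks so large training files do not fill memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def build_manifest(directory):
    """Map each file's path (relative to the directory) to its SHA-256 hash."""
    root = Path(directory)
    return {str(path.relative_to(root)): sha256_of_file(path)
            for path in sorted(root.rglob("*")) if path.is_file()}

def changed_files(directory, manifest):
    """List files that were added, removed or modified since the manifest."""
    current = build_manifest(directory)
    return sorted(name for name in set(manifest) | set(current)
                  if manifest.get(name) != current.get(name))
```

A typical flow would be to call `build_manifest` when a data set is approved, then call `changed_files` with that manifest before training and stop if the returned list is not empty.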

5. Robust model design:

Vendors should ensure that model robustness is a focus throughout product development, from project inception through to delivery. AI and ML models should be designed to withstand adversarial attacks by using techniques such as:

Model Architectures: Design model architectures that protect against adversarial attacks. This includes architectures with built-in defences against adversarial inputs, such as robust optimization algorithms, adversarial training, ensemble methods, defensive distillation, or feature squeezing.

Robustness Testing & Red-Teaming: Teams should test their model's robustness against diverse types of input variations and continually assess its performance in the presence of manipulated data.

Continuous Monitoring and Updating: It is also important to establish a system for continuous monitoring of the model's performance and to regularly update an AI or ML model with new data, retraining it to adapt to any changing conditions.
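A very small robustness test might measure how a model's accuracy changes as its inputs are perturbed. The sketch below is one possible shape for such a harness, assuming the model can be called as a plain function on a single numeric feature; the threshold model, the sample data and the noise scales are all hypothetical stand-ins rather than a recommended test suite.

```python
import random

def accuracy(model, samples):
    """Fraction of (feature, label) samples the model classifies correctly."""
    return sum(model(x) == y for x, y in samples) / len(samples)

def accuracy_under_noise(model, samples, scale, trials=20, seed=0):
    """Average accuracy when Gaussian noise of the given scale is added."""
    rng = random.Random(seed)  # fixed seed so test runs are repeatable
    scores = []
    for _ in range(trials):
        noisy = [(x + rng.gauss(0.0, scale), y) for x, y in samples]
        scores.append(accuracy(model, noisy))
    return sum(scores) / len(scores)

# Hypothetical stand-in model: anything longer than 7 m is a boat.
def threshold_model(length_m):
    return "boat" if length_m > 7 else "car"

samples = [(4.0, "car"), (5.0, "car"), (9.0, "boat"), (10.0, "boat")]

for scale in (0.0, 0.5, 5.0):
    print(scale, accuracy_under_noise(threshold_model, samples, scale))
```

Tracking how quickly accuracy falls as the noise scale grows gives a simple baseline that later model versions can be compared against.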

6. Limit human error:

Limiting human error when building AI is critical to ensure the performance, accuracy, and ethical use of AI models. Human errors in design, implementation or training can introduce biases, compromise security and privacy, and hinder the robustness of models.

Robust Training Procedures: These help ensure that the training process is resilient to attacks by using secure environments for training, verifying the integrity of training data sources, and implementing protocols for managing the training pipeline.

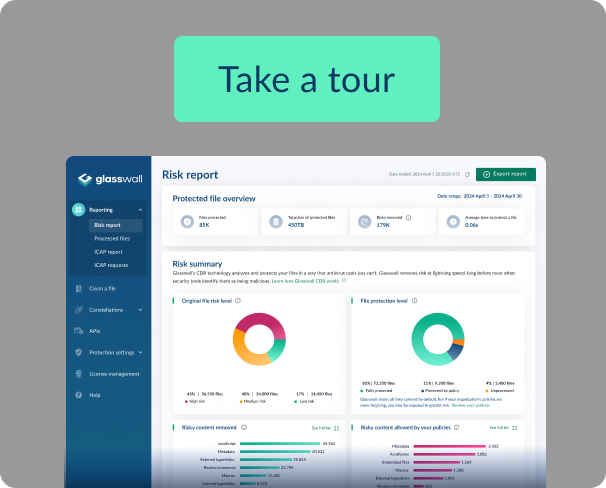

Zero-trust CDR: a security strategy best practice

The deployment of a zero-trust Content Disarm and Reconstruction (CDR) solution, such as our Glasswall CDR technology, should be a priority for security teams that are looking to protect their organization against complex file-based threats – like the ones encountered in a data poisoning attack.

As discussed above, bad actors can use a variety of techniques to poison how an AI model is trained and affect how it functions. CDR helps to eliminate a number of the attack vectors utilized by bad actors, including malware, inconsistent metadata and corrupted data structures that can lead to inconsistent and undesirable results. Data within files can be validated to ensure that it complies with what is permitted to be incorporated into the training data. The CDR process can help to preserve a chain of custody to prevent poisoning of data at both the structural and visual level.

Unlike traditional antivirus solutions that can only protect against what they have seen or observed before, our patented 4-step approach rebuilds files back to their manufacturer’s known-good specification.

Once our zero-trust CDR technology has completed its 4-step process, the Glasswall Embedded Engine generates a file that is free from threats and is accompanied by an in-depth analysis report. This report informs security teams on the risks identified and how our CDR technology addressed them.

We supply our zero-trust file protection solutions to organizations across the world, including members of NATO, the Five Eyes Alliance and AUKUS. Our CDR technology is infinitely scalable and helps users to comply with initiatives such as the NCSC's Pattern for Safely Importing Data, the NSA's Raise the Bar initiative and the NIST Risk Management Framework by the US Department of Commerce.

In addition, our CDR technology can be deployed to satisfy a wide variety of use cases, including Cross Domain Solutions, File Upload Portals and more.

Find out more about our use cases
